Chapter 6. WiFi

WiFi operates in the unlicensed ISM spectrum; it is trivial to deploy by anyone, anywhere; and the required hardware is simple and cheap. Not surprisingly, it has become one of the most widely deployed and popular wireless standards.

The name itself is a trademark of the WiFi Alliance, which is a trade association established to promote wireless LAN technologies, as well as to provide interoperability standards and testing. Technically, a device must be submitted to and certified by the WiFi Alliance to carry the WiFi name and logo, but in practice, the name is used to refer to any product based on the IEEE 802.11 standards.

The first 802.11 protocol was drafted in 1997, more or less as a direct adaptation of the Ethernet standard (IEEE 802.3) to the world of wireless communication. However, it wasn’t until 1999, when the 802.11b standard was introduced, that the market for WiFi devices took off. The relative simplicity of the technology, easy deployment, convenience, and the fact that it operated in the unlicensed 2.4 GHz ISM band allowed anyone to easily provide a "wireless extension" to their existing local area network. Today, nearly every new desktop, laptop, tablet, and smartphone, and just about every other form-factor device, is WiFi enabled.

From Ethernet to a Wireless LAN

The 802.11 wireless standards were primarily designed as an adaptation and an extension of the existing Ethernet (802.3) standard. Hence, just as Ethernet is commonly referred to as the LAN standard, the 802.11 family (Figure 6-1) is commonly known as the wireless LAN (WLAN). However, for the history geeks: much of the Ethernet protocol was itself inspired by ALOHAnet, developed at the University of Hawaii, which in 1971 gave the first public demonstration of a wireless packet data network. In other words, we have come full circle.

This distinction matters because of how ALOHAnet, and consequently Ethernet and WiFi, schedule all communication. Namely, they all treat the shared medium, whether it is a wire or radio waves, as a "random access channel": there is no central process, or scheduler, that controls which device is allowed to transmit data at any point in time. Instead, each device decides on its own, and all devices must work together to guarantee good shared channel performance.

The Ethernet standard has historically relied on a probabilistic carrier sense multiple access (CSMA) protocol, which is a complicated name for a simple "listen before you speak" algorithm. In brief, if you have data to send:

- Check whether anyone else is transmitting.

- If the channel is busy, listen until it is free.

- When the channel is free, transmit data immediately.

Of course, it takes time to propagate any signal; hence collisions can still occur. For this reason, the Ethernet standard also added collision detection (CSMA/CD): if a collision is detected, then both parties stop transmitting immediately and sleep for a random interval (with exponential backoff). This way, multiple competing senders won’t synchronize and restart their transmissions simultaneously.
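The random, exponentially growing backoff is what keeps colliding senders from colliding again in lockstep. Below is a minimal sketch of the truncated binary exponential backoff that 802.3 uses: after the n-th collision on the same frame, a sender waits a random number of slot times drawn from [0, 2^min(n, 10) - 1], and it gives up after 16 attempts. The function name and the injected random generator are illustrative choices, not part of any real driver API.

```python
import random

ATTEMPT_LIMIT = 16   # 802.3 gives up on a frame after 16 attempts
BACKOFF_LIMIT = 10   # the backoff window stops doubling after 10 collisions

def backoff_slots(collisions: int, rng: random.Random) -> int:
    """Slot times to wait after the n-th collision on the same frame.

    Truncated binary exponential backoff: pick k uniformly from
    [0, 2**min(n, 10) - 1].
    """
    if collisions < 1:
        raise ValueError("backoff applies only after a collision")
    if collisions >= ATTEMPT_LIMIT:
        raise RuntimeError("excessive collisions: frame dropped")
    return rng.randint(0, 2 ** min(collisions, BACKOFF_LIMIT) - 1)

# Two senders that just collided draw independently; the chance that they
# pick the same slot again (and collide again) is 1 / 2**min(n, 10).
rng = random.Random(7)
for n in (1, 2, 3, 10):
    print(n, backoff_slots(n, rng), backoff_slots(n, rng))
```

After the first collision, two senders have a 50% chance of choosing the same slot; after the third, only 1 in 8. Each extra collision halves the odds of another one, at the cost of a longer average wait.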

WiFi follows a very similar but slightly different model: due to hardware limitations of the radio, it cannot detect collisions while sending data. Hence, WiFi relies on collision avoidance (CSMA/CA), where each sender attempts to avoid collisions by transmitting only when the channel is sensed to be idle, and then sends its full message frame in its entirety. Once the WiFi frame is sent, the sender waits for an explicit acknowledgment from the receiver before proceeding with the next transmission.
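The collision-avoidance loop can be sketched as follows. This is a simplified model in the spirit of the 802.11 distributed coordination function (DCF): it ignores inter-frame spacing (DIFS/SIFS) and timing. The contention window values (15 and 1023) and the 7-attempt retry limit are assumptions typical of OFDM PHYs such as 802.11g. `channel_idle` and `transmit_and_wait_ack` are hypothetical hooks standing in for the radio.

```python
import random
from typing import Callable

# Assumed parameters, typical of OFDM PHYs such as 802.11g; real values vary.
CW_MIN = 15
CW_MAX = 1023
RETRY_LIMIT = 7

def send_frame(channel_idle: Callable[[], bool],
               transmit_and_wait_ack: Callable[[], bool],
               rng: random.Random) -> int:
    """Send one frame with CSMA/CA and return how many attempts it took.

    channel_idle() reports whether the next slot is idle;
    transmit_and_wait_ack() sends the whole frame and reports whether an
    ACK came back. Both are hypothetical stand-ins for the radio.
    """
    cw = CW_MIN
    for attempt in range(1, RETRY_LIMIT + 1):
        # Random backoff, counted down only in idle slots (busy slots freeze
        # it), so senders waiting on the same busy channel spread out.
        backoff = rng.randint(0, cw)
        while True:
            idle = channel_idle()
            if idle and backoff == 0:
                break
            if idle:
                backoff -= 1
        # No collision detection while sending: the frame goes out in full.
        if transmit_and_wait_ack():
            return attempt
        # Missing ACK: assume a collision and double the contention window.
        cw = min(2 * cw + 1, CW_MAX)
    raise RuntimeError("no ACK within retry limit: frame dropped")

acks = iter([False, False, True])  # first two attempts go unacknowledged
print(send_frame(lambda: True, lambda: next(acks), random.Random(1)))  # prints 3
```

Unlike the Ethernet sketch, the sender never learns about a collision directly. A missing acknowledgment is the only signal, so "collision" and "frame corrupted by noise" look identical to it.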

There are a few more details, but in a nutshell that’s all there is to it: the combination of these techniques is how both Ethernet and WiFi regulate access to the shared medium. In the case of Ethernet, the medium is a physical wire, and in the case of WiFi, it is the shared radio channel.

In practice, the probabilistic access model works very well for lightly loaded networks. In fact, we won’t show the math here, but we can prove that to get good channel utilization (that is, to minimize the number of collisions), the channel load must be kept below 10%. If the load is kept low, we can get good throughput without any explicit coordination or scheduling. However, if the load increases, the number of collisions rises quickly, leading to unstable performance of the entire network.
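For readers who want a feel for the math, the classic textbook model of pure ALOHA (the ancestor protocol mentioned earlier) shows the effect clearly. It assumes Poisson frame arrivals and measures the offered load G in frames per frame time, retransmissions included. A frame succeeds only if no other frame starts within one frame time on either side of it, giving a success probability of e^(-2G) and a channel utilization of S = G·e^(-2G). This is an illustration under those assumptions, not necessarily the derivation behind the 10% figure above.

```python
import math

def aloha_success(load: float) -> float:
    """Pure ALOHA: chance a frame overlaps no other frame, e^(-2G)."""
    return math.exp(-2 * load)

def aloha_throughput(load: float) -> float:
    """Useful fraction of channel time, S = G * e^(-2G)."""
    return load * aloha_success(load)

for g in (0.05, 0.1, 0.25, 0.5, 1.0):
    print(f"load {g:.2f}: {aloha_success(g):.1%} of frames get through, "
          f"utilization {aloha_throughput(g):.1%}")
```

At G = 0.1, roughly 82% of frames get through; at G = 0.5, only about 37% do, and utilization peaks at 1/(2e), about 18.4%. Carrier sensing improves on these numbers, but the shape of the curve is the same: past a modest load, collisions dominate.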

If you have ever tried to use a highly loaded WiFi network, with many peers competing for access—say, at a large public event, like a conference hall—then chances are, you have firsthand experience with "unstable WiFi performance." Of course, the probabilistic scheduling is not the only factor, but it certainly plays a role.

WiFi Standards and Features

The 802.11b standard launched WiFi into everyday use, but as with any popular technology, the IEEE 802 Standards Committee has not been idle and has actively continued to release new protocols (Table 6-1) with higher throughput, better modulation techniques, multistreaming, and many other new features.

| 802.11 protocol | Release | Freq (GHz) | Bandwidth (MHz) | Data rate per stream (Mbit/s) |
|---|---|---|---|---|
| b | Sep 1999 | 2.4 | 20 | 1, 2, 5.5, 11 |
| g | Jun 2003 | 2.4 | 20 | 6, 9, 12, 18, 24, 36, 48, 54 |
| n | Oct 2009 | 2.4 | 20 | 7.2, 14.4, 21.7, 28.9, 43.3, 57.8, 65, 72.2 |
| n | Oct 2009 | 5 | 40 | 15, 30, 45, 60, 90, 120, 135, 150 |
| ac | ~2014 | 5 | 20, 40, 80, 160 | up to 866.7 |

Today, the "b" and "g" standards are the most widely deployed and supported. Both utilize the unlicensed 2.4 GHz ISM band, use 20 MHz of bandwidth, and support at most one radio data stream. Depending on your local regulations, the transmit power is also likely fixed at a maximum of 200 mW. Some routers will allow you to adjust this value but will likely override it with a regional maximum.

So how do we increase the performance of our future WiFi networks? The "n" and upcoming "ac" standards double the bandwidth from 20 to 40 MHz per channel ("ac" goes further, to 80 and 160 MHz), use higher-order modulation, and add multiple radios to transmit multiple streams in parallel: multiple-input and multiple-output (MIMO). All combined, and in ideal conditions, this should enable gigabit-plus throughput with the upcoming "ac" wireless standard.
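The "gigabit-plus" arithmetic is straightforward: in ideal conditions, MIMO multiplies the per-stream rate from Table 6-1 by the number of parallel spatial streams. The sketch below assumes perfectly linear scaling and uses the maximum stream counts of each standard (1 for "g", 4 for "n", 8 for "ac"). These are raw PHY rates; real throughput is lower after MAC overhead and contention.

```python
# (per-stream peak PHY rate in Mbit/s from Table 6-1, max spatial streams)
STANDARDS = {
    "802.11g, 20 MHz": (54.0, 1),
    "802.11n, 40 MHz": (150.0, 4),
    "802.11ac, 160 MHz": (866.7, 8),
}

def peak_rate_mbps(per_stream_mbps: float, spatial_streams: int) -> float:
    """Idealized aggregate rate: MIMO sends independent streams in parallel."""
    if spatial_streams < 1:
        raise ValueError("need at least one spatial stream")
    return per_stream_mbps * spatial_streams

for name, (per_stream, max_streams) in STANDARDS.items():
    print(f"{name}: up to {peak_rate_mbps(per_stream, max_streams):.1f} Mbit/s")
```

Even two 802.11ac streams reach a 1,733.4 Mbit/s PHY rate, which is where the gigabit-plus figure comes from.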

Measuring and Optimizing WiFi Performance

By this point you should be skeptical of the notion of "ideal conditions," and for good reason. The wide adoption and popularity of WiFi networks also created one of its biggest performance challenges: inter- and intra-cell interference. The WiFi standard does not have any central scheduler, which also means that there are no guarantees on throughput or latency for any client.

The new WiFi Multimedia (WMM) extension enables basic Quality of Service (QoS) within the radio interface for latency-sensitive applications by sorting traffic into access categories (voice, video, best effort, and background), but few routers, and even fewer deployed clients, are aware of it. In the meantime, all traffic, both within your own network and in nearby WiFi networks, must compete for access to the same shared radio resource.
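On the application side, the usual way to request WMM treatment is to mark packets with a DiffServ code point (DSCP) and rely on the client's WiFi driver to map it to an access category. Many implementations follow the mapping described in RFC 8325, where Expedited Forwarding (EF, DSCP 46) lands in the voice category. A minimal sketch, assuming a platform that honors `IP_TOS` (Linux and macOS generally do; verify on yours). Whether the mark survives end to end depends on the OS, driver, and access point.

```python
import socket

# DSCP Expedited Forwarding (46) is the usual marking for voice. DSCP occupies
# the upper six bits of the IPv4 TOS byte, so the byte value is 46 << 2 = 0xB8.
DSCP_EF = 46
TOS_EF = DSCP_EF << 2

def open_voice_socket() -> socket.socket:
    """UDP socket whose outgoing IPv4 packets carry the DSCP EF marking."""
    sock = socket.socket(socket.AF_INET, socket.SOCK_DGRAM)
    # Not every OS honors IP_TOS; treat this as a request, not a guarantee.
    sock.setsockopt(socket.IPPROTO_IP, socket.IP_TOS, TOS_EF)
    return sock

sock = open_voice_socket()
print("TOS byte:", hex(sock.getsockopt(socket.IPPROTO_IP, socket.IP_TOS)))
sock.close()
```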

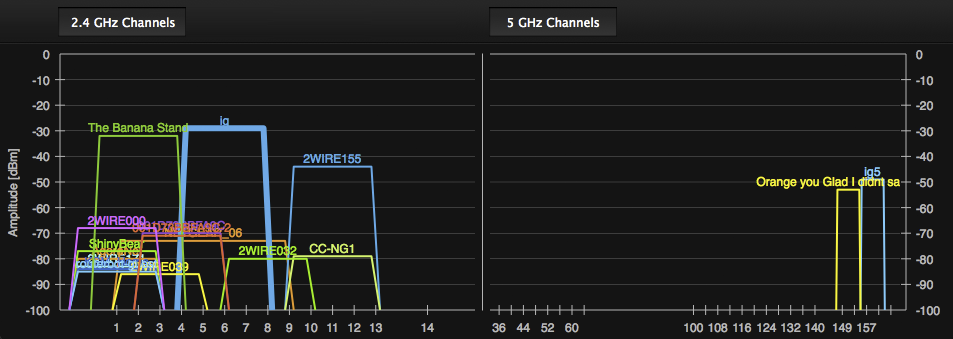

Your router may allow you to set some Quality of Service (QoS) policy for clients within your own network (e.g., maximum total data rate per client, or by type of traffic), but you nonetheless have no control over traffic generated by other, nearby WiFi networks. The fact that WiFi networks are so easy to deploy is what made them ubiquitous, but the widespread adoption has also created a lot of performance problems: in practice it is now not unusual to find several dozen different and overlapping WiFi networks (Figure 6-2) in any high-density urban or office environment.

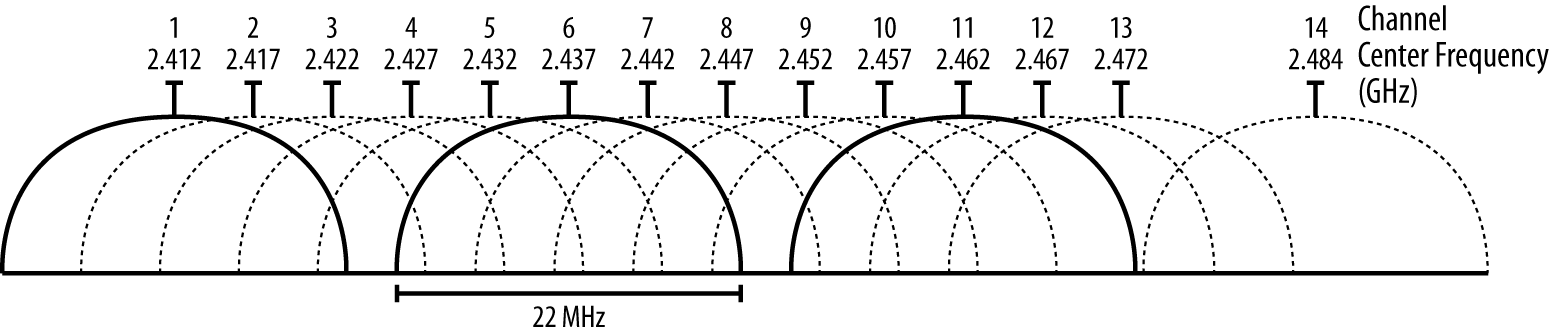

The most widely used 2.4 GHz band provides three non-overlapping 20 MHz radio channels: 1, 6, and 11 (Figure 6-3). Even this assignment is not consistent across all countries: in some, you may be allowed to use higher channels (13, 14), and in others you may be effectively limited to an even smaller subset. However, regardless of local regulations, this means that the moment you have more than two or three nearby WiFi networks, some must overlap and hence compete for the same shared bandwidth in the same frequency ranges.
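Why only three? Channels 1 through 13 have centers just 5 MHz apart (channel n sits at 2407 + 5n MHz), far closer than the width of a channel. The sketch below treats two channels as non-overlapping only when their centers are at least 25 MHz apart, the spacing of the 1/6/11 plan; real spectral masks are more nuanced, so that threshold is a simplifying assumption.

```python
from typing import Iterable, List

# Assumed threshold: the spacing of the 1/6/11 plan.
MIN_SEPARATION_MHZ = 25

def center_mhz(channel: int) -> int:
    """Center frequency of a 2.4 GHz channel (1-13 are 5 MHz apart; 14 is special)."""
    if 1 <= channel <= 13:
        return 2407 + 5 * channel
    if channel == 14:
        return 2484
    raise ValueError(f"not a 2.4 GHz channel: {channel}")

def overlaps(a: int, b: int) -> bool:
    return abs(center_mhz(a) - center_mhz(b)) < MIN_SEPARATION_MHZ

def non_overlapping(channels: Iterable[int]) -> List[int]:
    """Greedily pick channels that do not overlap any channel already picked."""
    picked: List[int] = []
    for ch in sorted(channels):
        if all(not overlaps(ch, other) for other in picked):
            picked.append(ch)
    return picked

print(non_overlapping(range(1, 12)))  # channels 1-11
```

With channels 1 through 11 available, the greedy pick yields 1, 6, and 11, so a fourth nearby network has no choice but to share spectrum with one of them.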

Your 802.11g client and router may be capable of reaching 54 Mbps, but the moment your neighbor, who is occupying the same WiFi channel, starts streaming an HD video over WiFi, your bandwidth is cut in half, or worse. Your access point has no say in this arrangement, and that is a feature, not a bug!

Unfortunately, latency performance fares no better. There are no guarantees for the latency of the first hop between your client and the WiFi access point. In environments with many overlapping networks, you should not be surprised to see high variability, measured in tens and even hundreds of milliseconds for the first wireless hop. You are competing for access to a shared channel with every other wireless peer.

The good news is, if you are an early adopter, then there is a good chance that you can significantly improve the performance of your own WiFi network. The 5 GHz band, used by the new 802.11n and 802.11ac standards, both offers a much wider frequency range and is still largely interference free in most environments. That is, at least for the moment, and assuming you don’t have too many tech-savvy friends nearby, like yourself! A dual-band router, which is capable of transmitting on both the 2.4 GHz and the 5 GHz bands, will likely offer the best of both worlds: compatibility with old clients limited to 2.4 GHz, and much better performance for any client on the 5 GHz band.

Putting it all together, what does this tell us about the performance of WiFi?

- WiFi provides no bandwidth or latency guarantees or assignment to its users.

- WiFi provides variable bandwidth based on signal-to-noise in its environment.

- WiFi transmit power is limited to 200 mW, and likely less in your region.

- WiFi has a limited amount of spectrum in 2.4 GHz and the newer 5 GHz bands.

- WiFi access points overlap in their channel assignment by design.

- WiFi access points and peers compete for access to the same radio channel.

There is no such thing as "typical" WiFi performance. The operating range will vary based on the standard, the location of the user, the devices used, and the local radio environment. If you are lucky, and you are the only WiFi user, then you can expect high throughput, low latency, and low variability in both. But once you are competing for access with other peers, or nearby WiFi networks, all bets are off: expect high variability for latency and bandwidth.

Packet Loss in WiFi Networks

The probabilistic scheduling of WiFi transmissions can result in a high number of collisions between multiple wireless peers in the area. However, even if that is the case, this does not necessarily translate to higher amounts of observed TCP packet loss. The data link and physical layer implementations of all WiFi protocols have their own retransmission and error correction mechanisms, which hide these wireless collisions from higher layers of the networking stack.

In other words, while TCP packet loss is definitely a concern for data delivered over WiFi, the absolute rate observed by TCP is often no higher than that of most wired networks. Instead of direct TCP packet loss, you are much more likely to see higher variability in packet arrival times due to the underlying collisions and retransmissions performed by the lower link and physical layers.
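A quick calculation shows how link-layer retries convert loss into delay. Assuming each over-the-air attempt fails independently with probability p, and the link layer makes at most n attempts, the loss that reaches TCP is p^n, while every frame costs on average 1 + p + ... + p^(n-1) transmissions. The 20% failure rate and the 7-attempt limit below are hypothetical values; real retry limits vary by driver and frame size, and real losses are often correlated.

```python
def residual_loss(frame_loss: float, max_attempts: int) -> float:
    """Chance that every link-layer attempt fails, so the loss reaches TCP."""
    return frame_loss ** max_attempts

def expected_attempts(frame_loss: float, max_attempts: int) -> float:
    """Mean transmissions per frame: attempt k happens only if k - 1 attempts failed."""
    return sum(frame_loss ** k for k in range(max_attempts))

# Hypothetical busy channel: 20% of frames collide or are corrupted on the air.
p, limit = 0.2, 7
print(f"loss seen by TCP: {residual_loss(p, limit):.6%}")
print(f"average transmissions per frame: {expected_attempts(p, limit):.3f}")
```

Under these assumptions TCP sees a loss rate of only about 0.0013%, but frames need about 1.25 transmissions on average, and an unlucky frame may need several. That extra airtime shows up as variable packet arrival times rather than as loss.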

Optimizing for WiFi Networks

The preceding performance characteristics of WiFi may paint an overly stark picture against it. In practice, it seems to work "well enough" in most cases, and the simple convenience that WiFi enables is hard to beat. In fact, you are now more likely to have a device that requires an extra peripheral to get an Ethernet jack for a wired connection than to find a computer, smartphone, or tablet that is not WiFi enabled.

With that in mind, it is worth considering whether your application can benefit from knowing about and optimizing for WiFi networks.

Leverage Unmetered Bandwidth

In practice, a WiFi network is usually an extension to a wired LAN, which is in turn connected via DSL, cable, or fiber to the wide area network. For an average user in the U.S., this translates to 8.6 Mbps of edge bandwidth, compared with a 3.1 Mbps global average (Table 1-2). In other words, most WiFi clients are still likely to be limited by the available WAN bandwidth, not by WiFi itself. That is, when the "radio network weather" is nice!

However, bandwidth bottlenecks aside, this also frequently means that a typical WiFi deployment is backed by an unmetered WAN connection—or, at the very least, a connection with much higher data caps and maximum throughput. While many users may be sensitive to large downloads over their 3G or 4G connections due to associated costs and bandwidth caps, frequently this is not as much of a concern when on WiFi.

Of course, the unmetered assumption is not true in all cases (e.g., a WiFi tethered device backed by a 3G or 4G connection), but in practice it holds true more often than not. Consequently, large downloads, updates, and streaming use cases are best done over WiFi when possible. Don’t be afraid to prompt the user to switch to WiFi on such occasions!
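The decision itself can be a small policy function. In this sketch, `ConnectionInfo`, its fields, and the 50 MB threshold are all hypothetical; how you actually learn whether the active network is WiFi or metered is platform-specific (on Android, for example, `ConnectivityManager.isActiveNetworkMetered()`), and that plumbing is not shown.

```python
from dataclasses import dataclass

LARGE_DOWNLOAD_BYTES = 50 * 1024 * 1024  # hypothetical cutoff for "large"

@dataclass
class ConnectionInfo:
    """Hypothetical snapshot of the active network, filled in by platform code."""
    is_wifi: bool
    is_metered: bool

def download_action(size_bytes: int, conn: ConnectionInfo) -> str:
    """Return 'start', or 'ask' to suggest switching to (or waiting for) WiFi."""
    if conn.is_wifi and not conn.is_metered:
        return "start"   # the common case: WiFi backed by an unmetered WAN link
    if size_bytes <= LARGE_DOWNLOAD_BYTES:
        return "start"   # small enough not to worry about data caps
    return "ask"         # cellular, or WiFi tethered to a metered link

print(download_action(2 * 1024 ** 3, ConnectionInfo(is_wifi=True, is_metered=True)))
```

Checking the metered flag in addition to the connection type handles the tethering case mentioned above: the device sees WiFi, but the bytes are still billed.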

Adapt to Variable Bandwidth

As we saw, WiFi provides no bandwidth or latency guarantees. The user’s router may have some application-level QoS policies, which may provide a degree of fairness to multiple peers on the same wireless network. However, the WiFi radio interface itself has very limited support for QoS. Worse, there are no QoS policies between multiple, overlapping WiFi networks.

As a result, the available bandwidth allocation may change dramatically, on a second-to-second basis, based on small changes in location, activity of nearby wireless peers, and the general radio environment.

As an example, an HD video stream may require several megabits per second of bandwidth (Table 6-3), and while most WiFi standards are sufficient in ideal conditions, in practice you should not be surprised to see, and should anticipate, intermittent drops in throughput. In fact, due to the dynamic nature of available bandwidth, you cannot and should not extrapolate past download bitrate too far into the future. Testing for bandwidth rate just at the beginning of the video will likely result in intermittent buffering pauses as the radio conditions change during playback.

| Container | Video resolution | Encoding | Video bitrate (Mbit/s) |
|---|---|---|---|
| mp4 | 360p | H.264 | 0.5 |
| mp4 | 480p | H.264 | 1–1.5 |
| mp4 | 720p | H.264 | 2–2.9 |
| mp4 | 1080p | H.264 | 3–4.3 |

Instead, while we can’t predict the available bandwidth, we can and should adapt based on continuous measurement through techniques such as adaptive bitrate streaming.
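A minimal sketch of that idea: smooth each segment's measured throughput with an exponentially weighted moving average, then pick the highest rendition that fits within a safety margin of the estimate. The rendition bitrates come from the upper end of each range in the table above; the smoothing weight (0.3) and safety factor (0.8) are assumptions. Production players built on HLS or MPEG-DASH typically also factor in buffer levels.

```python
from dataclasses import dataclass
from typing import Optional

# Renditions from the bitrate table above, top of each range (Mbit/s).
RENDITIONS_MBPS = {"360p": 0.5, "480p": 1.5, "720p": 2.9, "1080p": 4.3}

@dataclass
class ThroughputEstimator:
    """Exponentially weighted moving average of measured download throughput."""
    alpha: float = 0.3                    # weight of the newest sample (assumed)
    estimate_mbps: Optional[float] = None

    def add_sample(self, bytes_received: int, seconds: float) -> float:
        sample_mbps = bytes_received * 8 / seconds / 1e6
        if self.estimate_mbps is None:
            self.estimate_mbps = sample_mbps
        else:
            self.estimate_mbps = (self.alpha * sample_mbps
                                  + (1 - self.alpha) * self.estimate_mbps)
        return self.estimate_mbps

def pick_rendition(estimate_mbps: float, safety: float = 0.8) -> str:
    """Highest rendition whose bitrate fits within a safety margin of the estimate."""
    budget = estimate_mbps * safety
    fitting = [name for name, rate in RENDITIONS_MBPS.items() if rate <= budget]
    if not fitting:
        return "360p"  # lowest quality; let the buffer absorb the shortfall
    return max(fitting, key=lambda name: RENDITIONS_MBPS[name])

# Re-estimate after every downloaded segment, not just at startup.
estimator = ThroughputEstimator()
for segment_bytes, seconds in [(1_000_000, 1.0), (250_000, 1.0), (250_000, 1.0)]:
    rate = estimator.add_sample(segment_bytes, seconds)
    print(f"estimate {rate:.2f} Mbit/s -> {pick_rendition(rate)}")
```

When throughput drops from 8 to 2 Mbit/s, the smoothed estimate falls over two segments and the player steps down from 1080p to 720p instead of stalling. The smoothing avoids flapping between renditions on a single bad sample.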

Adapt to Variable Latency

Just as there are no bandwidth guarantees when on WiFi, similarly, there are no guarantees on the latency of the first wireless hop. Further, things get only more unpredictable if multiple wireless hops are needed, such as in the case when a wireless bridge (relay) access point is used.

In the ideal case, when there is minimum interference and the network is not loaded, the wireless hop can take less than one millisecond with very low variability. However, in practice, in high-density urban and office environments the presence of dozens of competing WiFi access points and peers creates a lot of contention for the same radio frequencies. As a result, you should not be surprised to see a 1–10 millisecond median for the first wireless hop, with a long latency tail: expect an occasional 10–50 millisecond delay and, in the worst case, even as high as hundreds of milliseconds.
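Because the median hides the tail, it helps to summarize first-hop latency samples (for example, repeated pings to the access point) with percentiles as well. The sketch below uses Python's standard `statistics` module; the sample data is hypothetical, shaped like the distribution described above: a low median with occasional long delays.

```python
import statistics
from typing import Dict, List

def latency_summary(samples_ms: List[float]) -> Dict[str, float]:
    """Median plus tail percentiles for a set of first-hop latency samples."""
    if len(samples_ms) < 2:
        raise ValueError("need at least two samples")
    # 99 cut points; index k is the (k + 1)-th percentile.
    cuts = statistics.quantiles(samples_ms, n=100, method="inclusive")
    return {
        "p50": statistics.median(samples_ms),
        "p95": cuts[94],
        "p99": cuts[98],
        "max": max(samples_ms),
    }

# Hypothetical samples: mostly 2 ms, some 30 ms stalls, one 250 ms outlier.
samples = [2.0] * 90 + [30.0] * 9 + [250.0]
print(latency_summary(samples))
```

Here the median is 2 ms while the 95th percentile is 30 ms. A timeout or jitter buffer sized from the median alone would misfire on roughly one packet in ten.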

If your application is latency sensitive, then you may need to think carefully about adapting its behavior when running over a WiFi network. In fact, this may be a good reason to consider WebRTC, which offers the option of an unreliable UDP transport. Of course, switching transports won’t fix the radio network, but it can help lower the protocol- and application-induced latency overhead.
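One way an unreliable transport lowers application-induced latency is by letting the receiver skip obsolete data instead of waiting for it. Over TCP, update 3 is held back until a lost update 2 is retransmitted (head-of-line blocking). Over an unordered, unreliable channel (for example, a WebRTC `RTCDataChannel` opened with `ordered: false` and `maxRetransmits: 0`), the receiver can apply the newest state immediately and drop late arrivals. The class below is a hypothetical sketch of that "latest wins" policy; it ignores sequence-number wraparound.

```python
from dataclasses import dataclass
from typing import Optional

@dataclass
class LatestStateReceiver:
    """Keep only the newest state update; never wait for a missing older one."""
    last_seq: int = -1
    state: Optional[bytes] = None
    dropped_stale: int = 0

    def on_datagram(self, seq: int, payload: bytes) -> bool:
        """Apply the update if it is newer than anything seen; return whether it was applied."""
        if seq <= self.last_seq:
            self.dropped_stale += 1  # late or duplicate: already superseded
            return False
        self.last_seq = seq          # gaps are fine: skipped updates are obsolete
        self.state = payload
        return True

receiver = LatestStateReceiver()
for seq, payload in [(1, b"pos=1"), (3, b"pos=3"), (2, b"pos=2")]:
    receiver.on_datagram(seq, payload)
print(receiver.state, receiver.dropped_stale)
```

This only suits data where a newer message fully supersedes an older one, such as positions or sensor readings. Anything that must arrive intact, like chat text, still needs reliable delivery.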